There is a growing number of online research tools that are having a significant impact on the field of digital humanities. Easy to use and accessible, these tools are quite powerful in their ability to let researchers sift through large data sets and visualize network relationships. Over the past several assignments I was able to test and review three popular tools: Voyant, Kepler.gl, and Palladio. While each tool was unique in terms of its interface and original purpose, each provided a means to an end. This is an important distinction, especially if one were starting a research project and needed to get a handle on whatever data was available.

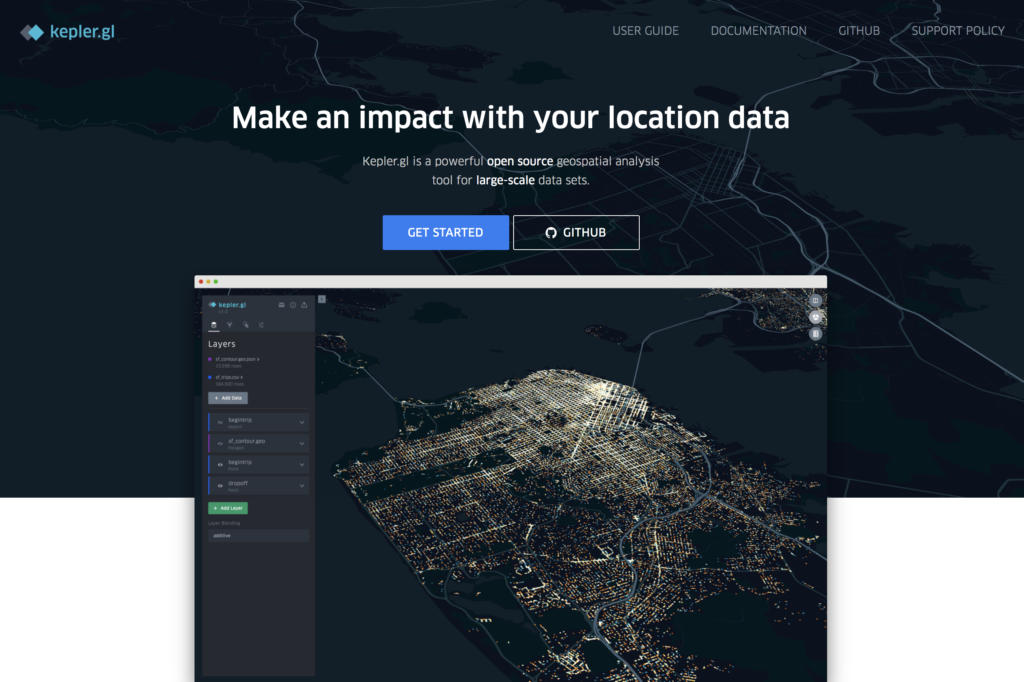

For example, Voyant is a “text mining” application that permits the end user to load a large corpus of text and visualize it as word clouds, or tag clouds. This is a significant capability if one is not clear as to what the text describes or includes. Kepler.gl provides a very specific feature set that allows end users to load geospatial data and generate a variety of maps. Palladio, on the other hand, is much more robust, providing several important features such as mapping, graphing, customized lists, and a gallery view for images.

While each application provides value on its own, the real lesson for any DH researcher is to be prepared to use a variety of tools to visualize and map data. This requires some effort to experiment with and test each application’s capabilities. Voyant gives the end user a rather straightforward approach to discovering word patterns or hidden terms. Kepler.gl provides a relatively easy way to present physical location data over time. Finally, Palladio permits the end user to visualize patterns of relationships. This becomes an important factor when trying to define interdependencies in a humanistic study.

For my class assignments using the WPA’s Slave Narratives data, integrating all three tools would be beneficial in analyzing the 1930s research. Voyant could be used to identify text patterns in the questions asked and the subjects’ responses. Kepler.gl could be used to show whether there was a relationship between where the interviews were conducted and where the enslaved person was from. Finally, Palladio, with its mapping, graphing, lists, and image gallery, could provide an acceptable interface for exploring the final results.